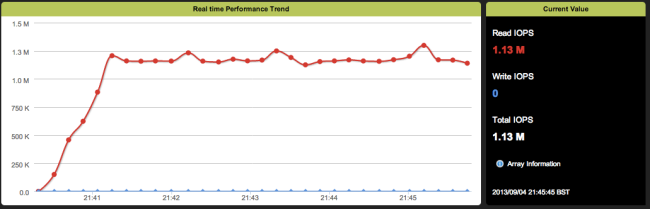

I got my hands on a nice new server (20x 3 GHz Ivy Bridge v2 cores) and managed to connect it to some Violin Memory flash capacity.

With almost no tuning, I kicked off a few workloads and was quite pleased with the results.

These are some of the best vdbench results I have seen for FC-connected persistent storage, both from a single dual-socket server and from a 6.x Linux release.

```
[root@dell-oel6 ~]# uname -a
Linux dell-oel6 3.8.13-16.2.1.el6uek.x86_64 #1 SMP Thu Nov 7 17:01:44 PST 2013 x86_64 x86_64 x86_64 GNU/Linux
[root@dell-oel6 ~]# vi tom.config
```

70/30 r/w 4k IO

```
[root@dell-oel6 ~]# ./vdbench -f tom.config
11:23:13.740 input argument scanned: '-ftom.config'
11:23:14.163 All slaves are now connected
11:23:15.002 Starting RD=run3; I/O rate: Uncontrolled MAX; elapsed=60; For loops: threads=32.0

Oct 17, 2014  interval        i/o   MB/sec   bytes   read    resp      resp    resp    cpu%   cpu%
                             rate  1024**2     i/o    pct    time       max  stddev sys+usr    sys
11:23:25.129         1 1020533.40  3986.46    4096  70.01   0.901  1765.584   3.901    49.1   41.9
11:23:35.080         2 1034747.00  4041.98    4096  70.00   0.896  1898.664   4.106    49.6   45.4
11:23:45.109         3 1035505.40  4044.94    4096  70.00   0.895  2030.101   4.073    49.9   45.7
11:23:55.104         4 1036199.80  4047.66    4096  70.00   0.894  1828.606   4.124    49.5   45.4
11:24:05.102         5 1035522.00  4045.01    4096  69.99   0.895  2310.299   4.058    49.4   45.3
11:24:15.099         6 1036075.00  4047.17    4096  69.99   0.894  2030.939   4.003    49.3   45.2
11:24:15.108   avg_2-6 1035609.84  4045.35    4096  70.00   0.895  2310.299   4.073    49.6   45.4
11:24:19.157 Vdbench execution completed successfully. Output directory: /root/output
```
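The summary line is easy to sanity-check by hand. As its label says, avg_2-6 averages intervals 2 through 6, leaving interval 1 out. MB/sec is simply the I/O rate times the transfer size, divided by 1024**2 as the column header indicates. Here is a minimal Python sketch of that arithmetic using the numbers copied from this first run. The function names are my own, not anything vdbench provides:

```python
# Per-interval I/O rates (intervals 1-6) copied from the first 4k run above.
run1_iops = [1020533.40, 1034747.00, 1035505.40, 1036199.80, 1035522.00, 1036075.00]
XFERSIZE = 4096  # bytes per I/O, from the "bytes i/o" column


def avg_skip_first(rates):
    """Mean of intervals 2..N, i.e. what the avg_2-6 line reports for six intervals."""
    steady = rates[1:]
    return sum(steady) / len(steady)


def mb_per_sec(iops, xfersize):
    """I/O rate -> MB/sec, where 1 MB = 1024**2 bytes as in the column header."""
    return iops * xfersize / 1024**2


avg = avg_skip_first(run1_iops)
print(f"avg_2-6 IOPS:   {avg:.2f}")
print(f"avg_2-6 MB/sec: {mb_per_sec(avg, XFERSIZE):.2f}")
```

It prints 1035609.84 IOPS and 4045.35 MB/sec, matching the avg_2-6 line. The MB/sec column is fully explained by a million-plus 4k I/Os per second.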

50/50 r/w 64k IO

```
[root@dell-oel6 ~]# ./vdbench -f tom.config
11:35:06.298 input argument scanned: '-ftom.config'
11:35:06.716 All slaves are now connected
11:35:08.001 Starting RD=run3; I/O rate: Uncontrolled MAX; elapsed=60; For loops: threads=17.0

Oct 17, 2014  interval        i/o   MB/sec   bytes   read    resp      resp    resp    cpu%   cpu%
                             rate  1024**2     i/o    pct    time       max  stddev sys+usr    sys
11:35:18.055         1  112100.90  7006.31   65536  49.98   4.359  1322.165   9.009     7.3    3.7
11:35:28.066         2  113141.60  7071.35   65536  50.07   4.372  2020.149   8.952     4.5    3.9
11:35:38.063         3  112481.10  7030.07   65536  50.01   4.380  1336.651   8.843     4.4    3.9
11:35:48.058         4  112552.10  7034.51   65536  49.98   4.381  1819.720   9.119     4.4    3.9
11:35:58.058         5  112498.50  7031.16   65536  49.92   4.380  1706.458   8.902     4.4    3.9
11:36:08.058         6  112585.90  7036.62   65536  50.00   4.379  1386.934   9.135     4.2    3.8
11:36:08.066   avg_2-6  112651.84  7040.74   65536  50.00   4.378  2020.149   8.991     4.4    3.9
11:36:10.710 Vdbench execution completed successfully. Output directory: /root/output
```
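For the 64k mixed run, it helps to translate MB/sec into link terms. Reads and writes are both 65536 bytes, so the 50.00% read share (vdbench's read pct counts I/Os) splits the bandwidth evenly. Multiplying MB (1024**2 bytes) by 8 then gives bits. This is a rough Python sketch that counts payload bytes only. It ignores FC encoding and framing overhead, so the line rate actually needed is somewhat higher:

```python
# Values from the avg_2-6 line of the 50/50 64k run above.
avg_mb_per_sec = 7040.74  # MB/sec, where 1 MB = 1024**2 bytes
read_pct = 50.00          # "read pct" column (percentage of I/Os that are reads)


def split_bandwidth(total_mb, read_pct):
    """Split MB/sec into read and write shares; valid here because both use the same transfer size."""
    read_mb = total_mb * read_pct / 100
    return read_mb, total_mb - read_mb


def mb_to_gbit(mb):
    """MB/sec (1024**2 bytes) -> decimal Gbit/s of payload; FC encoding/framing overhead is ignored."""
    return mb * 1024**2 * 8 / 1e9


read_mb, write_mb = split_bandwidth(avg_mb_per_sec, read_pct)
print(f"read:  {read_mb:.2f} MB/sec")
print(f"write: {write_mb:.2f} MB/sec")
print(f"total: ~{mb_to_gbit(avg_mb_per_sec):.2f} Gbit/s of payload")
```

This works out to about 3520.37 MB/sec in each direction and roughly 59.06 Gbit/s of payload overall.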

100% writes 4k IO

```
[root@dell-oel6 ~]# ./vdbench -f tom.config
11:40:42.487 All slaves are now connected
11:40:44.001 Starting RD=run3; I/O rate: Uncontrolled MAX; elapsed=60; For loops: threads=37.0

Oct 17, 2014  interval        i/o   MB/sec   bytes   read    resp      resp    resp    cpu%   cpu%
                             rate  1024**2     i/o    pct    time       max  stddev sys+usr    sys
11:40:54.144         1  983854.00  3843.18    4096   0.00   1.075  1276.008   4.268    45.9   38.5
11:41:04.111         2  998762.80  3901.42    4096   0.00   1.075  1276.465   4.137    47.6   43.5
11:41:14.107         3  996183.40  3891.34    4096   0.00   1.076  1265.410   4.287    47.4   43.3
11:41:24.072         4  996273.50  3891.69    4096   0.00   1.076  1290.658   4.218    47.1   43.1
11:41:34.071         5  995814.10  3889.90    4096   0.00   1.076  1319.793   4.148    46.7   42.8
11:41:44.066         6  993638.60  3881.40    4096   0.00   1.079  1236.961   4.188    47.1   43.1
11:41:44.074   avg_2-6  996134.48  3891.15    4096   0.00   1.076  1319.793   4.196    47.2   43.1
11:41:47.986 Vdbench execution completed successfully. Output directory: /root/output
```
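Little's Law gives one more cross-check across all three runs. The average number of I/Os in flight equals the I/O rate times the mean response time, which vdbench reports in milliseconds. Applied to the avg_2-6 lines, that gives roughly 927, 493 and 1072 outstanding I/Os. Dividing each by its run's threads= value lands on about 29 every time. My reading of the vdbench docs is that threads= is a per-SD limit, which would fit a workload spread over about 29 LUNs. The config file is not shown here, though, so treat that as an inference rather than a fact. A small Python sketch of the calculation:

```python
# (avg_2-6 IOPS, mean resp time in ms, threads= from the "Starting RD" line) for each run above.
runs = {
    "4k, 70% read":  (1035609.84, 0.895, 32),
    "64k, 50% read": (112651.84, 4.378, 17),
    "4k, 0% read":   (996134.48, 1.076, 37),
}


def outstanding_ios(iops, resp_ms):
    """Little's Law: mean I/Os in flight = throughput (I/Os per second) x mean response time (seconds)."""
    return iops * resp_ms / 1000.0


for name, (iops, resp_ms, threads) in runs.items():
    in_flight = outstanding_ios(iops, resp_ms)
    print(f"{name:14s} in flight ~{in_flight:7.1f}   in flight / threads ~{in_flight / threads:5.1f}")
```

The consistent ratio suggests the array kept up with whatever concurrency vdbench offered, without response time climbing faster than the I/O rate.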